👂sounds like: Bangalore, traffic, silence, AI, plankton, hippo, whales

from the rivers to the ocean

Hello friends. I’ve been in Bangalore for the last ten days for FutureFantastic, a festival of AI art, presenting a piece I worked on called Asthir Gehrayee with a fantastic team of artists.

Read on for all my latest on…

🇮🇳 Bangalore

🤖FutureFantastic/AI art

🕴Frank Sidenote

🌊 Asthir Gehrayee

🎤 Climateprov

🎮 Only A Game

📆 Upcoming events

📝 Quickfire Reviews

💻 Nerd Corner

🇮🇳 Bangalore

One of my favourite lyrics is from a song called ‘From The Rivers To The Ocean’ by Bill Callahan, the artist formerly known as Smog:

Well, I can tell you about the river

Or we could just get in

I think ‘just get in the river’ is good advice for a lot of situations. In the interests of just getting in the river, I did very little research about Bangalore before travelling there. I’d been told repeatedly it is a chaotic city, but I wasn’t sure what this meant. On my first morning there, picturing myself as this generation’s Anthony Bourdain, I asked the man at the front desk of my hotel where I should go for breakfast.

He tilted his head left and right. ‘Umesh Refreshments. Over there where the red car is.’ He pointed across the traffic. ‘Very good Indian food. Lots of locals go there.’

I thanked him and left the hotel. I found out straight away what chaotic meant. Cars, motorbikes and auto-rickshaws sped past on 100 Feet Road in front of me. At the same time, beautiful trees and plants grew everywhere along the street and through the pavement, as they do across most of the city.

I was reminded of this interview with American composer and sound artist John Cage talking about traffic:

The sound experience which I prefer to all others is the experience of silence. And the silence almost everywhere in the world now is traffic. If you listen to Beethoven, or to Mozart, you see that they’re always the same. But if you listen to traffic, you see it’s always different.

I stood on the edge of the road for a long time trying to work out how to cross. Earlier in the same interview (above), John Cage says:

When I talk about music, it finally comes to people’s minds that I’m talking about sound that doesn’t mean anything. Or is not inner, but is just outer. And they say–these people, who understand that finally–they say, ‘you mean it’s just sounds?!’, thinking that for something to just be a sound is to be useless.

Whereas I love sounds, just as they are. And I have no need for them to be anything more than what they are. I don’t want them to be psychological, or for a sound to pretend that it’s a bucket, or that it’s a president, or that it’s in love with another sound. I just want it to be a sound.

I imagined him striding out into the road, blissfully ignorant of the meaning of the traffic, saying to himself ‘I love silence. I have no need for this traffic noise to preten–’ and then being mowed down by an auto-rickshaw.

An austere-looking old Indian man stopped as he walked past and gave me a hard, confusing stare. He looked pointedly at my knees. I looked down at my shorts and remembered vaguely something I had been told about having bare legs being considered inappropriate. Another line from the lyrics of ‘From The Rivers To The Ocean’ is ‘have faith in wordless knowledge’. With this in mind, I took faith in the wordless knowledge I had completely imagined this man to be trying to communicate to me telepathically and went back inside to change. Outside again, after a long time staring at the road, I went to Umesh Refreshments and looked at the menu from the street outside. I realised I had no idea what the menu said, and much less what an appropriate thing to order for breakfast would be. I left with my tail between my legs and ate at the hotel.

I am pleased to report I later found out the shorts thing isn’t actually something anyone cares about, I had some excellent breakfasts with the help of suggestions from those more in the know, and I became perhaps a little too confident crossing the road. A better lyric might be ‘I can tell you about the river, or you could just get in. On the other hand, it’s often better to do basic research about the river first. At least look up what you should get for breakfast in the river’.

🤖FutureFantastic/AI art

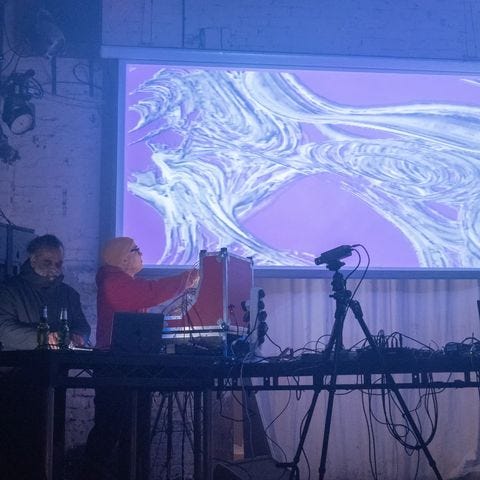

I was in Bangalore for FutureEverything and BeFantastic’s FutureFantastic Festival, installing a piece I’d collaborated on with four other artists called Asthir Gehrayee. It was an absolute joy (apart from the expected stress of installing) to work with the Asthir Gehrayee team, and you can see more of their work below:

The festival covered two themes – AI and climate change. AI is a word that is used to mean a range of things. Sometimes it’s used to mean ‘getting a computer to do something instead of a human’. However, under that definition, a washing machine is an AI. A self checkout is an AI. Another definition, the one I’m using here, is a computer that uses machine learning. This is a process in which a computer is fed a large amount of data, learns to recognise what all that data has in common, and then uses the connections between the data to generate its own outputs.

This field is moving very quickly and often in unexpected and unusual directions. One of the most interesting things about the festival itself was seeing how artists are responding to and working with all these new ideas and different directions.

Irini pointed out during the festival that AI models like ChatGPT, Midjourney and Dall-E–the most widespread and commonly used AI tools at the moment–are best thought of as carefully curated and packaged consumer products. They are trained on huge, huge amounts of data scraped from the internet, giving no credit to the data’s original source or author. What the user interacts with is a carefully refined, tweaked, and often, very sanitised expression of the work of potentially millions of other people. For a lot of the machine learning work that really interests me, artists have collected their own datasets, trained a machine to work with and recognise the things in that dataset, and made their work using that.

For example, for Irini’s piece ‘As Uncanny As a Body’, she trained an AI model on images of dancing figures, and then used the model to create a dancer that morphs between different states:

Many of the pieces in the festival used similar techniques. ‘<A Synthetic Song Beyond the Sea>’ by Unhappy Circuit used a machine learning model he trained on both human voices and whale songs. This resulted in a piece that sounds somewhere between the two, a hybrid whale-human singing:

One of my favourite pieces from the festival was “Human in the Loop (wip)” by choreographer Nicole Seiler. For the last ten years she’s been working with text instructions for dance, meaning she has ten years of data to feed a machine learning model with. She trained a model on that data to generate new instructions on its own, which was the idea behind the piece that closed the festival on Saturday night. Two dancers wore headphones, and the AI model generated instructions for the dancers live, telling them what to do using a text-to-speech model in real time. At times the instructions were audible to the audience, at times we had no idea what the performers were being told by the AI. Here is a very brief clip:

The results are so interesting to see interpreted in real time by the dancers, especially when the AI generates unexpected or impossible instructions.

A theme I heard repeatedly through the festival is that as mainstream AI products are developing and becoming more and more sanitised, they’re becoming less interesting and less fun. AI users and developers broadly fall at a point along a spectrum with two poles:

Use AI to make things more efficient, faster, cleaner, better automated, easier to use

Use AI to make things that are bizarre, interesting, say something about the dataset it’s trained on, and/or say something about how we interact with computers and each other

The more AI is road-tested and developed, the more it leans towards the former.

BOOOOOORING

Bjorn Lengers from CyberRäuber told me that their piece The Merge–a live-streamed performance around Bangalore, in which performers read out instructions generated live by AI for twelve hours–was using GPT-3, an older version of the model ChatGPT runs on, rather than the newer, slicker GPT-4, because the new ones are just less likely to give bizarre, fun and interesting responses.

Another common topic of conversation about AI is whether it will steal all of our jobs, making creative work redundant or worthless. Upasana Nattoji Roy, presenting during the festival about her work, told the audience that when she works with her design team, AI is a useful tool for prototyping, quickly coming up with iterations of designs, and opening up discussions.

In this interview with Simpsons writer John Swartzwelder, he says:

I always write my scripts all the way through as fast as I can, the first day, if possible, putting in crap jokes and pattern dialogue — “Homer, I don’t want you to do that.” “Then I won’t do it.” Then the next day, when I get up, the script’s been written. It’s lousy, but it’s a script. The hard part is done.

The optimist in me thinks AI is very useful for getting together a first draft very, very quickly, and then using human creativity to redraft it.

The pessimist in me says the huge corporations in control of the development and distribution of mainstream AI products don’t have human creativity as a priority, and are only concerned with the more efficient, faster, cleaner, better automated, lower labour costs, much more profitable side of things.

Most of the time my pessimistic side wins out. Then again, who knows where AI will be a year from now. My guess is that we are maximum four months before a cult forms worshipping a machine learning model as a sentient prophet, mistaking its convincing imitation of sentience for real sentience, and maximum a year from then, they’ll make Roko’s Basilisk happen. I hope at the very least they run their cult on a weird enough model for us to go through a fun quirky apocalypse rather than an efficient, streamlined, boring one.

🕴Frank Sidenote

I quietly found the name of the festival very funny because it reminded me of Greater Mancunian cult comedy legend Frank Sidebottom, who had a habit of calling anything and everything ‘fantastic’, and I enjoyed imagining Frank Sidebottom strolling round the exhibition space with his massive fibreglass head.

I had similar thoughts at university when we were studying Symphonie fantastique by Berlioz, who I pictured wearing a massive fibreglass head, shouting to his orchestra, ‘My new symphony is REALLY fantastic, you’re all going to proper like it’

Anyway,

🌊 Asthir Gehrayee

Our installation was all about plankton. Plankton are very important for the climate, and are responsible for removing 50% of the world’s carbon dioxide from the atmosphere, and our piece took this as a starting point.

Plankton move very slowly through the water, twisting like a corkscrew. For a human to experience what it is like to move as a plankton, they would need to be in a liquid the same density as peanut butter due to our much bigger size. We asked audience members to imagine they are moving through peanut butter and move their bodies in front of our wall of plankton. The plankton more or less ignore the audience, unless the audience move together, as if through peanut butter. Only then do the visuals and the sound of the piece come alive.

We used AI in two elements of the piece–to track people’s faces, and also to generate visuals for an introductory video shown to audience members as they entered the installation space.

If you want to download the Max code and poke around inside, scroll down to Nerd Corner at the bottom.

🎤 Climateprov

I was absolutely overjoyed to be asked by Blessin Varkey and his team to join them for two performances of their project Climateprov, an improvised comedy show in the classic improv comedy tradition (e.g. Whose Line Is It Anyway?) with two angles:

All prompts were themed around climate change

The improvisers took suggestions from both the audience and an AI model, which generated scenes and suggestions live

I played acoustic guitar and improvised scores for some of the scenes, and also underscored the classic improv framework Irish Drinking Song (with live-generated AI lyrics).

This was some of the most fun I’ve had performing–having to think on my feet to find the right chords for things being shouted out by both the audience AND the AI was a great challenge.

We’re currently looking for more places to perform the show, so if this sounds like something you want to see happen wherever you’re reading this, please get in touch.

🎮 Only A Game

I also wrote the music for one of the other pieces in the festival, Only A Game. This was a game installation that used motion tracking–audience members had to perform a series of moves in the right order. As time went on, the on-screen representations of natural disasters got worse and worse unless the players performed the moves correctly.

This is another one we’re hoping to develop, so stay tuned for more.

Huge thanks to FutureEverything and BeFantastic, and all the other artists for being so great and supportive.

Here are some things happening in the future which I hope will be fantastic:

📆 Upcoming events

🦛 Hippo

📍 Fuel Cafe Bar, Manchester

📆 April 21st

Hippo are a crackin Manchester indie pop band and I’m joining them as a bonus player on top of their already crackin 5-piece lineup. Check em out here:

📽 Test Card

📍Not sure yet but save the date if you like, somewhere in Manchester

📆 May 17th

Test Card is a digital art/music/live visuals event. I’m playing a set along with the fantastic Heron. Expect bleeps and bloops.

📝 Quickfire Reviews from January and March

🍩 The cinnamon rolls from HOME in Manchester: god they’re good

🍛 Nagarjuna in Bangalore: the best place I ate in Bangalore. They just kept bringing us rice! But I was already so full! But I kept eating!

🎬 Annihilation: broadly thought this was very good, some incredible visuals in there

🎬 Bill & Ted Face The Music: Bill & Ted’s Excellent Adventure was my favourite film growing up, and I was so pleased to see the third instalment did a great job of updating the situation

📖 Strong Female Character by Fern Brady: Fern Brady’s memoir focusing on her late-diagnosed autism is so interesting, funny and informative

📺 The Sopranos: I know this was on the list last time but I finished it and it really is as good as everyone says

📺 The Girl Chewing Gum: I stumbled on this short weird film from 1976 by accident again recently–it’s only 12 minutes long and it’s dead good. You may as well just go watch it now


Thanks for reading all the way to here, the end. If you have any thoughts, comments, suggestions, please do get in touch in the comments section or by replying to this email.

If you have any questions about music – as broad or specific as you like–get in touch and I’ll try to answer them next time I email out Agony Aunt style.

Okay thanks bye for now!

…

…

…

…

I think they’re gone

🤖 Nerd Corner

Oh thank god. Finally we are alone. It’s just me and you now nerds. We can talk about the only thing that matters, the thing that makes the world go round, brings joy to our hearts and peace to our minds: code

Here’s the user interface for the project:

You can download all the Max code from Github here:

https://github.com/dvd-mcf/asthir-gehrayee-substack

There isn’t space in this email to explain it all, but long story short, I used the cv.jit package in Max to track faces, measured the velocity of the faces, then used the speed, position and number of faces to control synthesisers and generate the sound. We used Timo Hoogland’s n4m-p5 websocket code to send the position and speed data of each face to p5.js. Irini’s p5.js code then used that to affect the visuals in real time.
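For anyone who wants the gist without opening Max, here is a rough sketch of that chain in plain Python. It is not the code from the repo (the real thing is a Max patch plus p5.js). Everything named here is made up for illustration: the `Face` class, the `cutoff_hz` mapping and its ranges, and the shape of the JSON message. It also assumes faces arrive in the same order every frame, which a real tracker won't guarantee.

```python
import json
import math
from dataclasses import dataclass


@dataclass
class Face:
    """Centre of one tracked face, in normalised 0..1 frame coordinates."""
    x: float
    y: float


def face_speed(prev: Face, curr: Face, dt: float) -> float:
    """Distance a face moved between two frames, divided by elapsed seconds."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return math.hypot(curr.x - prev.x, curr.y - prev.y) / dt


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from the input range to the output range, clamped."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)


def frame_message(prev_faces, curr_faces, dt):
    """Build one JSON message per frame: face count plus per-face data.

    Assumption: faces are paired by list index. If the two lists differ in
    length, zip() silently drops the unmatched faces.
    """
    faces = []
    for prev, curr in zip(prev_faces, curr_faces):
        speed = face_speed(prev, curr, dt)
        faces.append({
            "x": curr.x,
            "y": curr.y,
            "speed": speed,
            # Hypothetical synth mapping: faster movement opens a filter (Hz).
            "cutoff_hz": scale(speed, 0.0, 2.0, 200.0, 4000.0),
        })
    return json.dumps({"count": len(faces), "faces": faces})


if __name__ == "__main__":
    previous = [Face(0.2, 0.5), Face(0.7, 0.4)]
    current = [Face(0.25, 0.5), Face(0.7, 0.45)]
    # At roughly 30 frames per second, dt is about 1/30 of a second.
    print(frame_message(previous, current, dt=1 / 30))
```

In the installation, a message along these lines would be what travels over the websocket to the visuals. Here it is just printed so you can see the shape of the data.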

If you have any questions get in touch!

Okay here’s the actual end, byyyyyye

Here’s a bonus song for the nerds: